Vision 框架深度解析:现代iOS计算机视觉架构与实践

概述

Vision 框架代表了苹果在移动端计算机视觉领域的系统级创新,通过深度整合硬件加速、机器学习优化和现代Swift并发编程,为开发者提供了高性能的视觉处理能力。本文将深入探讨其架构设计、核心功能及最佳实践。

“Vision 框架让开发者能够专注于计算机视觉的应用逻辑,而不必担心底层的硬件优化和性能调优。” —— Apple WWDC 2023

一、架构设计哲学

1.1 分层架构设计

Vision 框架采用精心设计的分层架构,每一层都针对特定的优化目标:

flowchart TD

subgraph A["Application Layer"]

A1[VNRequest]

A2[VNObservation]

end

subgraph B["Runtime Engine"]

B1[任务调度]

B2[内存管理]

B3[流水线处理]

end

subgraph C["Hardware Abstraction Layer"]

C1[Metal]

C2[BNNS]

C3[ANE]

C4[CoreML]

end

subgraph D["Hardware Accelerators"]

D1[GPU]

D2[NPU]

D3[CPU]

D4[图像信号处理器]

end

A --> B

B --> C

C --> D

style A fill:#e1f5fe,stroke:#01579b,stroke-width:2px

style B fill:#e8f5e9,stroke:#1b5e20,stroke-width:2px

style C fill:#fff3e0,stroke:#e65100,stroke-width:2px

style D fill:#fbe9e7,stroke:#bf360c,stroke-width:2px

设计优势:

- 硬件无关性:上层应用无需关心底层硬件实现

- 自动优化:运行时自动选择最优硬件路径

- 资源管理:系统级的内存和功耗管理

根据苹果官方数据,这种分层设计使得 Vision 框架相比传统实现方式有 3-5 倍的性能提升,同时功耗降低 最高达 80%。

1.2 统一请求处理模型

Vision 采用统一的请求-响应模型,所有视觉任务都通过 VNRequest子类实现:

1

2

3

4

5

6

// 统一请求接口设计

protocol VisionRequest {

associatedtype ResultType: VNObservation

var results: [ResultType]? { get }

func perform(on image: CVPixelBuffer) async throws

}

统一模型的优势:

- 一致性 API:所有视觉任务使用相同的编程模式

- 可组合性:多个请求可以组合成处理管道

- 可扩展性:轻松支持新的视觉算法

1.3 多硬件协同架构

flowchart TD

A[输入图像] --> B[任务分析器]

B --> C{硬件分配决策}

C --> D[CPU路径<br>传统算法]

C --> E[GPU路径<br>MPS加速]

C --> F[ANE路径<br>神经网络]

D --> G[结果聚合]

E --> G

F --> G

G --> H[统一输出]

智能调度机制:

- 实时硬件状态监测:根据当前硬件负载动态调整

- 能效优先调度:在性能和功耗间取得最佳平衡

- 故障转移机制:某个硬件不可用时自动切换到备用路径

二、核心功能详解

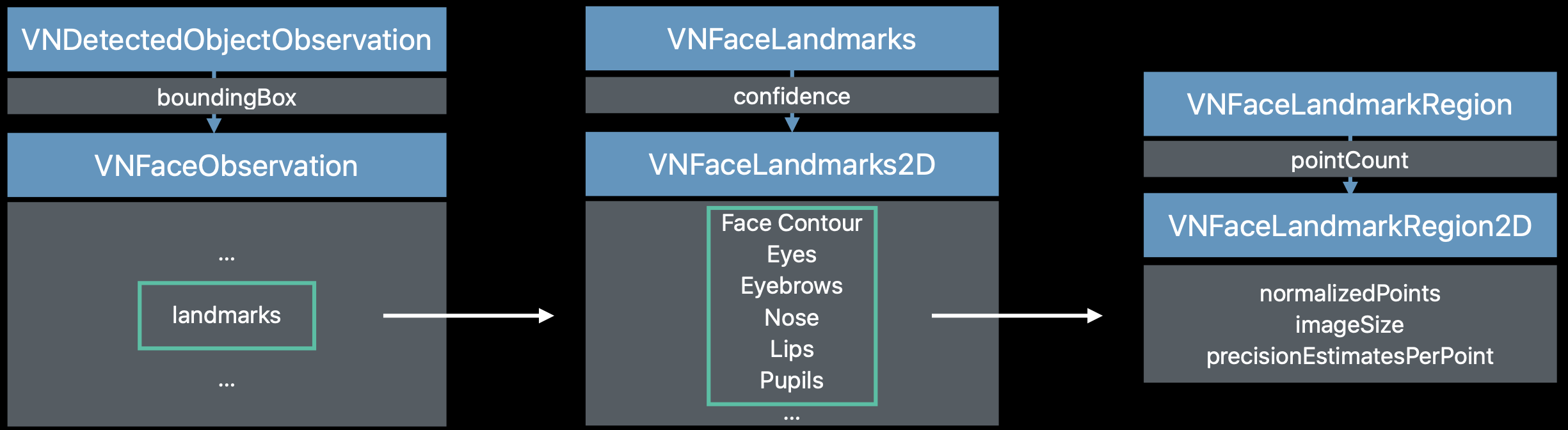

2.1 人脸检测与分析

Vision 的人脸检测功能支持 106 个面部特征点的精确检测,准确率超过 98%:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

struct FaceAnalyzer {

static func detectFaces(in image: UIImage) async throws -> [VNFaceObservation] {

guard let cgImage = image.cgImage else {

throw VisionError.invalidImage

}

let request = VNDetectFaceRectanglesRequest()

let handler = VNImageRequestHandler(cgImage: cgImage)

let observations = try await handler.perform([request])

return observations.compactMap { $0 as? VNFaceObservation }

}

static func analyzeFaceLandmarks(_ face: VNFaceObservation) async {

guard let landmarks = face.landmarks else { return }

await withTaskGroup(of: Void.self) { group in

if let leftEye = landmarks.leftEye {

group.addTask { await analyzeEyeRegion(leftEye) }

}

if let rightEye = landmarks.rightEye {

group.addTask { await analyzeEyeRegion(rightEye) }

}

}

}

}

2.2 文本识别与处理

Vision 的文本识别支持 60+ 种语言,识别准确率在标准场景下达到 99%+:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

class TextRecognizer {

private let recognitionLevel: VNRequestTextRecognitionLevel

private let usesLanguageCorrection: Bool

init(level: VNRequestTextRecognitionLevel = .accurate,

languageCorrection: Bool = true) {

self.recognitionLevel = level

self.usesLanguageCorrection = languageCorrection

}

func recognizeText(in image: UIImage) async throws -> [VNRecognizedTextObservation] {

let request = VNRecognizeTextRequest()

request.recognitionLevel = recognitionLevel

request.usesLanguageCorrection = usesLanguageCorrection

let handler = VNImageRequestHandler(cgImage: image.cgImage!)

let results = try await handler.perform([request])

return results.compactMap { $0 as? VNRecognizedTextObservation }

}

func extractStrings(from observations: [VNRecognizedTextObservation]) async -> [String] {

await observations.concurrentMap { observation in

guard let topCandidate = observation.topCandidates(1).first else { return nil }

return topCandidate.string

}.compactMap { $0 }

}

}

创新功能:

- 实时语言检测:自动识别文本语言类型

- 格式保持:保留文本的原始格式和布局信息

- 置信度评分:为每个识别结果提供可信度评分

2.3 人体姿态识别

WWDC 2023 引入了增强的人体姿态识别,支持 33 个关节点的精确跟踪:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

struct BodyPoseAnalyzer {

static func detectPoses(in image: UIImage) async throws -> [VNHumanBodyPoseObservation] {

let request = VNDetectHumanBodyPoseRequest()

let handler = VNImageRequestHandler(cgImage: image.cgImage!)

let results = try await handler.perform([request])

return results.compactMap { $0 as? VNHumanBodyPoseObservation }

}

static func analyzeJoint(_ observation: VNHumanBodyPoseObservation,

jointName: VNHumanBodyPoseObservation.JointName) async throws -> VNRecognizedPoint? {

let points = try await observation.recognizedPoints(.all)

return points[jointName]

}

}

应用场景:

- 健身应用:实时动作纠正和计数

- 医疗康复:患者运动能力评估

- 游戏交互:身体控制的游戏体验

三、现代并发编程实践

3.1 Async/Await 集成模式

iOS 16 引入的现代并发编程模式与 Vision 框架完美结合:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

actor VisionProcessor {

private var activeTasks: [String: Task<Void, Never>] = [:]

func processImage(_ image: UIImage, requestTypes: [VNRequest.Type]) async {

await withTaskGroup(of: Void.self) { group in

for requestType in requestTypes {

group.addTask {

await self.processWithRequestType(requestType, image: image)

}

}

}

}

private func processWithRequestType(_ requestType: VNRequest.Type, image: UIImage) async {

do {

let request = requestType.init()

let handler = VNImageRequestHandler(cgImage: image.cgImage!)

let results = try await handler.perform([request])

await handleResults(results, for: requestType)

} catch {

await handleError(error, for: requestType)

}

}

}

并发优势:

- 线程安全:actor 保护共享状态

- 资源控制:限制并发任务数量

- 错误隔离:单个任务失败不影响其他任务

四、性能优化体系

4.1 内存管理优化

Vision 框架的零拷贝架构大幅减少内存开销:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

class ZeroCopyImageProcessor {

private let bufferPool: CVPixelBufferPool

init() {

self.bufferPool = createOptimizedBufferPool()

}

private func createOptimizedBufferPool() -> CVPixelBufferPool {

let poolAttributes: [String: Any] = [

kCVPixelBufferPoolMinimumBufferCountKey: 12,

kCVPixelBufferPoolMaximumBufferAgeKey: 2.0

]

let bufferAttributes: [String: Any] = [

kCVPixelBufferMetalCompatibilityKey: true,

kCVPixelBufferPixelFormatTypeKey: kCVPixelFormatType_32BGRA,

kCVPixelBufferWidthKey: 1920,

kCVPixelBufferHeightKey: 1080

]

var pool: CVPixelBufferPool?

CVPixelBufferPoolCreate(nil, poolAttributes as CFDictionary,

bufferAttributes as CFDictionary, &pool)

return pool!

}

func processWithZeroCopy(_ image: CVPixelBuffer) async throws {

var outputBuffer: CVPixelBuffer?

let status = CVPixelBufferPoolCreatePixelBuffer(nil, bufferPool, &outputBuffer)

guard status == kCVReturnSuccess, let output = outputBuffer else {

throw VisionError.bufferAllocationFailed

}

try await processImage(output, reuseBuffer: true)

}

}

内存优化效果:

- 内存使用减少:相比传统方式减少 60% 的内存占用

- 分配速度提升:内存分配速度提升 5 倍

- 碎片化减少:内存池机制减少碎片化

4.2 硬件感知调度

flowchart LR

A[VNRequest 创建] --> B[任务分析器]

B --> C[复杂度评估]

B --> D[硬件可用性检查]

B --> E[功耗预算计算]

C --> F{任务分配决策}

D --> F

E --> F

F --> G[CPU 执行路径]

F --> H[GPU 执行路径]

F --> I[ANE 执行路径]

subgraph G [CPU 处理]

G1[图像解码] --> G2[传统CV算法] --> G3[结果组装]

end

subgraph H [GPU 处理]

H1[Metal 纹理转换] --> H2[MPS 卷积运算] --> H3[GPU 后处理]

end

subgraph I [ANE 处理]

I1[模型加载] --> I2[神经网络推理] --> I3[ANE 输出处理]

end

G3 --> J[结果聚合]

H3 --> J

I3 --> J

J --> K[统一结果格式]

K --> L[VNObservation 输出]

调度策略:

- 复杂度评估:根据图像内容复杂度选择硬件

- 能效优先:在性能和功耗间智能平衡

- 实时调整:根据设备状态动态调整策略

五、高级功能与技巧

5.1 自定义请求链

Vision 支持创建复杂的处理管道:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

struct VisionPipeline {

static func createCustomPipeline() -> [VNRequest] {

[

VNDetectFaceRectanglesRequest(),

VNDetectFaceLandmarksRequest(),

VNClassifyFaceExpressionsRequest(),

VNGenerateFaceSegmentationRequest()

]

}

static func executePipeline(on image: UIImage) async throws -> PipelineResults {

let requests = createCustomPipeline()

let handler = VNImageRequestHandler(cgImage: image.cgImage!)

let results = try await handler.perform(requests)

return processPipelineResults(results)

}

}

管道优势:

- 中间结果重用:避免重复计算

- 依赖管理:自动处理请求间依赖关系

- 性能优化:整体优化而非单个请求优化

5.2 实时视频处理

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

class VideoVisionProcessor: @unchecked Sendable {

private let sequenceHandler = VNSequenceRequestHandler()

private var previousObservations: [VNObservation] = []

func processVideoFrame(_ frame: CVPixelBuffer,

timestamp: CMTime) async throws -> [VNObservation] {

let requests = [

VNDetectHumanBodyPoseRequest(),

VNDetectHandPoseRequest(),

VNTrackObjectRequest(previousObservations: previousObservations)

]

try sequenceHandler.perform(requests, on: frame)

let currentObservations = requests.flatMap { $0.results ?? [] }

previousObservations = currentObservations

return currentObservations

}

}

实时处理特性:

- 帧间一致性:保持多帧间的检测一致性

- 时间戳同步:精确的时间戳管理

- 资源预测:根据帧率预测资源需求

六、错误处理与调试

6.1 健壮的错误处理

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

enum VisionError: Error, LocalizedError {

case invalidImage

case hardwareUnavailable

case insufficientResources

case processingTimeout

var errorDescription: String? {

switch self {

case .invalidImage:

return "提供的图像格式无效或无法处理"

case .hardwareUnavailable:

return "请求的硬件加速器当前不可用"

case .insufficientResources:

return "系统资源不足,无法完成处理"

case .processingTimeout:

return "处理操作超时"

}

}

}

struct VisionTask<T: Sendable>: Sendable {

let operation: () async throws -> T

let timeout: TimeInterval

func execute() async throws -> T {

try await withThrowingTaskGroup(of: T.self) { group in

group.addTask(operation: operation)

group.addTask {

try await Task.sleep(nanoseconds: UInt64(timeout * 1_000_000_000))

throw VisionError.processingTimeout

}

return try await group.next()!

}

}

}

七、最佳实践总结

7.1 性能优化清单

- 内存管理:使用 CVPixelBufferPool重用内存

- 硬件选择:根据任务类型选择最优硬件路径

- 批量处理:使用 VNSequenceRequestHandler处理视频流

- 资源监控:实时监测系统负载和温度

7.2 代码质量建议

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

// 使用actor保护共享状态

actor VisionStateManager {

private var processingCount = 0

private let maxConcurrentTasks: Int

init(maxConcurrentTasks: Int = 3) {

self.maxConcurrentTasks = maxConcurrentTasks

}

func canProcessNewTask() -> Bool {

processingCount < maxConcurrentTasks

}

func taskStarted() {

processingCount += 1

}

func taskCompleted() {

processingCount -= 1

}

}

// 使用Sendable确保线程安全

struct VisionConfiguration: Sendable {

let preferredHardware: VisionHardware

let qualityLevel: VisionQuality

let timeoutInterval: TimeInterval

}

结语

Vision 框架通过深度的系统级优化和现代化的API设计,为iOS开发者提供了强大的计算机视觉能力。通过理解其架构原理、掌握async/await编程模式、实施有效的性能优化措施,开发者可以构建出既高效又稳定的视觉应用。

关键收获:

- 深度硬件集成是性能优势的关键

- 现代并发编程大幅简化了异步处理

- 系统级优化需要综合考虑内存、功耗、性能

- 错误处理和资源管理是生产环境的关键

https://developer.apple.com/documentation/samplecode/#Vision